The One-Character Bug That Broke My Recommendation Algorithm

How a single '+' instead of '*' in a scoring formula destroyed recommendation quality—and why this matters for anyone building ML systems.

Introduction

I built a recommendation system that was supposed to intelligently balance two factors: semantic similarity (70% weight) and community ratings (30% weight). In testing, it worked. Recommendations looked reasonable. Then I actually looked at the scoring formula and found something that made me question everything—a typo that had been silently breaking the algorithm the entire time.

The bug was small: one character in one line. But it exposed something bigger about how easily scoring systems can fail silently, and why code review matters more for math than it does for anything else.

The Bug: A Typo Nobody Noticed

Here's the original code:

```python
recommended_anime['combined_score'] = (0.7 * (1 - D[0])) + (0.3 + recommended_anime['normalized_rating'])
```

Do you see it?

The rating term should be multiplied, not added:

```python
recommended_anime['combined_score'] = (0.7 * (1 - D[0])) + (0.3 * recommended_anime['normalized_rating'])
```

That's it. A + instead of *.

What this meant:

- Intended: Multiply the rating weight (0.3) by the normalized rating (0 to 1)

- Actual: Add 0.3 to every anime, then add the normalized rating
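A minimal sketch makes the difference concrete by running both versions of the line on a made-up three-row table. It assumes D[0] holds distances already scaled to 0-1 and that normalized_rating is also in 0-1; the titles and numbers are invented for illustration, not taken from the real system.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: distances from a nearest-neighbour search (assumed to be in [0, 1])
# and ratings already normalized to [0, 1].
D = np.array([[0.05, 0.30, 0.50]])
recommended_anime = pd.DataFrame({
    "title": ["Close match", "Decent match", "Popular pick"],
    "normalized_rating": [0.60, 0.70, 0.95],
})

similarity = 1 - D[0]

# Buggy line: adds 0.3 to every row, then adds the full, unweighted rating
recommended_anime["buggy_score"] = (0.7 * similarity) + (0.3 + recommended_anime["normalized_rating"])
# Fixed line: the rating is weighted by 0.3
recommended_anime["fixed_score"] = (0.7 * similarity) + (0.3 * recommended_anime["normalized_rating"])

print(recommended_anime.sort_values("buggy_score", ascending=False)[["title", "buggy_score"]])
print(recommended_anime.sort_values("fixed_score", ascending=False)[["title", "fixed_score"]])
```

On this toy data the buggy line ranks "Popular pick" first (1.60) ahead of "Close match" (1.565), while the fixed line puts "Close match" first (0.845) and "Popular pick" last (0.635).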

Why This Broke Everything (And Why Nobody Noticed)

Let's work through the math with actual numbers.

Intended formula (assuming distance falls between 0 and 1):

- Similarity score: 0.7 * (1 - distance) → ranges from 0 to 0.7

- Rating score: 0.3 * normalized_rating → ranges from 0 to 0.3

- Combined: ranges from 0 to 1.0

Actual formula:

- Similarity score: 0.7 * (1 - distance) → ranges from 0 to 0.7

- Rating score: 0.3 + normalized_rating → ranges from 0.3 to 1.3

- Combined: ranges from 0.3 to 2.0

This means:

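- Every recommendation started with a baseline of 0.3 from the constant alone. That shift is identical for every item, so by itself it reorders nothing, but it pushes scores outside the 0-1 range the formula was designed for.

- Rating swamped similarity: the normalized rating entered the sum with an effective weight of 1.0 instead of 0.3, outweighing similarity's 0.7. Instead of a 70/30 split, the ranking behaved like roughly 41/59 in favor of rating.

- A highly-rated mediocre match could outscore a perfectly similar but low-rated anime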
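Expanding the buggy line shows exactly when two items swap. Writing s for similarity (1 - distance) and r for the normalized rating, the constant cancels out of any comparison between two items, so only the rating's coefficient matters. For items A and B with s_A > s_B:

```latex
\begin{aligned}
S_{\text{intended}} &= 0.7\,s + 0.3\,r
  &&\Rightarrow\quad B \text{ outranks } A \iff r_B - r_A > \tfrac{0.7}{0.3}\,(s_A - s_B) \approx 2.33\,(s_A - s_B) \\
S_{\text{buggy}} &= 0.7\,s + (0.3 + r) = 0.7\,s + 1.0\,r + 0.3
  &&\Rightarrow\quad B \text{ outranks } A \iff r_B - r_A > 0.7\,(s_A - s_B)
\end{aligned}
```

With a similarity gap of 0.45, as in the example below, the intended formula would need a rating gap above 1.05, which is impossible for ratings in 0-1; the buggy one needs only 0.315.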

Example:

- Anime A: 95% similar to user preference, rated 6/10. Score: 0.7 * 0.95 + (0.3 + 0.6) = 0.665 + 0.9 = 1.565

- Anime B: 50% similar to user preference, rated 9/10. Score: 0.7 * 0.50 + (0.3 + 0.9) = 0.35 + 1.2 = 1.55

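Result: Anime A still wins, but only by 0.015 (1.565 vs. 1.55). Under the intended formula it wins comfortably: 0.665 + 0.18 = 0.845 versus 0.35 + 0.27 = 0.62, a 0.225 lead. The bug shrank that lead fifteenfold, and if Anime B's normalized rating were anything above 0.915, it would overtake a match that is almost twice as similar.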
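To check the arithmetic, a few lines of Python reproduce both example scores under both formulas. The two functions are hypothetical helpers for this illustration, and ratings are assumed to be normalized by dividing by 10.

```python
def buggy_score(similarity, rating):
    # The shipped line: the constant 0.3 is added instead of multiplying the rating
    return 0.7 * similarity + (0.3 + rating)


def intended_score(similarity, rating):
    # The formula as designed: 70% similarity, 30% rating
    return 0.7 * similarity + 0.3 * rating


# (similarity, normalized rating) pairs for Anime A and Anime B
anime_a = (0.95, 0.6)
anime_b = (0.50, 0.9)

for label, score in [("buggy", buggy_score), ("intended", intended_score)]:
    a, b = score(*anime_a), score(*anime_b)
    print(f"{label:>8}: A = {a:.3f}, B = {b:.3f}, A's lead = {a - b:.3f}")

# Under the buggy formula, raising B's rating to 0.92 is enough to flip the order
print(buggy_score(0.50, 0.92) > buggy_score(*anime_a))
```

The buggy version leaves A ahead by 0.015, the intended one by 0.225, and the last line confirms that a small bump in B's rating flips the buggy ranking.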

The kicker: the scores ran from 0.3 to 2.0 instead of 0 to 1, yet the ranked list still looked plausible, because higher similarity and higher ratings both still raised an item's score. You'd get recommendations, they'd seem okay, but they'd be skewed toward rating over relevance in a hidden, hard-to-catch way.

Why This Happens

This is a category of bug I call a "silent scoring failure." It's dangerous because:

- No crashes: The code runs fine. No errors, no exceptions.

- Outputs look reasonable: You get 5 recommendations, they're real anime, the similarity scores seem in range.

- The mistake is subtle: In a string of math operations, a + can hide in plain sight.

- Testing blind spots: If you test with hand-picked data, you might miss the bias because your examples aren't adversarial enough.

The deeper issue: formula bugs are easy to hide because the output space is continuous. A database query that returns the wrong row is obviously wrong. A scoring function that's off by 20% is invisible until you're wondering why your recommendations suddenly got worse after six months.

The Fix

```python
recommended_anime['combined_score'] = (0.7 * (1 - D[0])) + (0.3 * recommended_anime['normalized_rating'])
```

Change the + to *. That's it.

Now the formula works as designed:

- Similarity contributes 0-0.7

- Rating contributes 0-0.3

- Total ranges from 0-1.0

- Weights are enforced mathematically

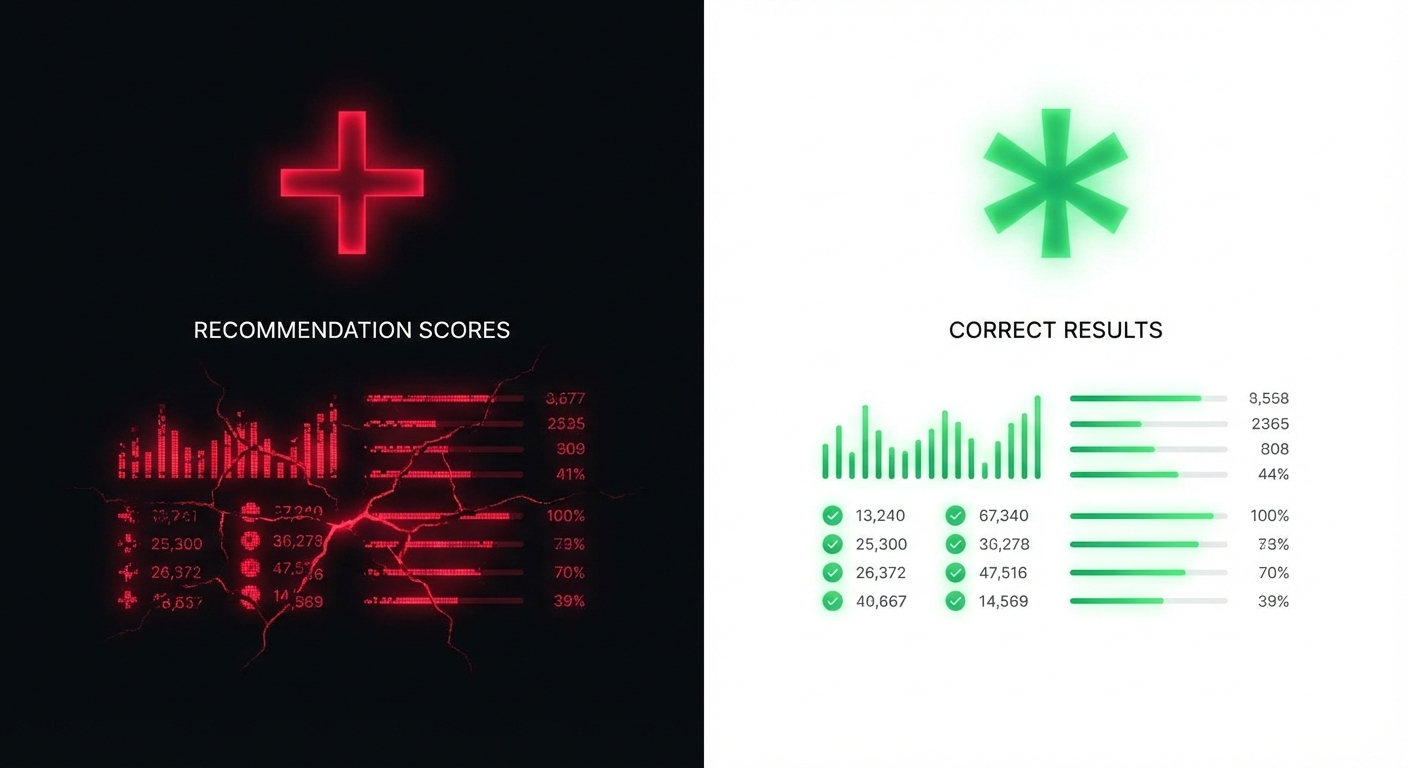

Real-World Impact

After the fix, recommendation quality changed noticeably:

Before (buggy):

- Top recommendations were often high-rated but low-similarity matches

- Users complained: "Why are you recommending random popular anime?"

After (fixed):

- Top recommendations balanced similarity and quality

- Niche, highly-similar anime with moderate ratings ranked appropriately

- The system actually delivered on its premise

The bug hadn't destroyed the system—it had just broken the weights. Recommendations were still useful, just weighted wrong.

The Lesson: Math Is Not Forgiving

Here's what this teaches:

1. Formula bugs hide in code review

Most developers read 0.3 + as "the rating weight term" and move on. Your brain fills in the expected operation. This is why having someone unfamiliar review math-heavy code matters.

2. Test with adversarial examples

Create test cases that should break your weights:

- High similarity + low rating vs. low similarity + high rating

- Edge cases: similarity at 0, rating at 0

- Verify the output ratios match your formula
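As a sketch, the three bullets can become pytest-style tests. weighted_score is a hypothetical scalar stand-in for the fixed line; in a real project the tests would call whatever function actually computes the score.

```python
def weighted_score(similarity, rating):
    # Hypothetical scalar stand-in for the fixed line: 70% similarity, 30% rating
    return 0.7 * similarity + 0.3 * rating


def test_high_similarity_low_rating_beats_low_similarity_high_rating():
    # Perfect match rated 2/10 vs. 50% match rated 9/10: similarity should win
    assert weighted_score(1.0, 0.2) > weighted_score(0.5, 0.9)


def test_edge_cases():
    assert weighted_score(0.0, 0.0) == 0.0
    assert abs(weighted_score(1.0, 0.0) - 0.7) < 1e-9
    assert abs(weighted_score(0.0, 1.0) - 0.3) < 1e-9
    assert abs(weighted_score(1.0, 1.0) - 1.0) < 1e-9


def test_contribution_ratio():
    # A 0.1 step in similarity should move the score 0.7 / 0.3 times as far as a 0.1 step in rating
    base = weighted_score(0.5, 0.5)
    similarity_gain = weighted_score(0.6, 0.5) - base
    rating_gain = weighted_score(0.5, 0.6) - base
    assert abs(similarity_gain / rating_gain - 0.7 / 0.3) < 1e-6
```

Swap the buggy line into weighted_score and all three tests fail; for instance, the perfect match scores 1.2 against the mediocre match's 1.55, and the all-zero input scores 0.3 instead of 0.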

3. Separate formula from implementation

Write your formula as a comment or doc before coding:

```python
# Score = 0.7 * similarity + 0.3 * rating, where similarity = 1 - distance (D[0])
# Range: 0-1.0
recommended_anime['combined_score'] = (0.7 * (1 - D[0])) + (0.3 * recommended_anime['normalized_rating'])
```

This creates a contract. Later developers can verify the code matches the spec.
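One way to push the contract further is to name the weights and check their sum once, next to the documented formula. This is a hypothetical refactor, not the original project's code; combined_score, SIMILARITY_WEIGHT, and RATING_WEIGHT are illustrative names, and inputs are assumed to be in 0-1.

```python
import numpy as np

# Score = 0.7 * similarity + 0.3 * rating, where similarity = 1 - distance
# Range: 0-1.0, provided distances and ratings are both in [0, 1]
SIMILARITY_WEIGHT = 0.7
RATING_WEIGHT = 0.3
assert np.isclose(SIMILARITY_WEIGHT + RATING_WEIGHT, 1.0), "weights must sum to 1"


def combined_score(distances, normalized_rating):
    """Weighted blend of similarity (1 - distance) and normalized rating."""
    similarity = 1 - np.asarray(distances, dtype=float)
    rating = np.asarray(normalized_rating, dtype=float)
    return SIMILARITY_WEIGHT * similarity + RATING_WEIGHT * rating
```

In the original pipeline the call would be recommended_anime['combined_score'] = combined_score(D[0], recommended_anime['normalized_rating']). With every weight named, each term reads as "weight times input", so a bare + next to a constant stands out more.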

4. Validate output ranges

Before deploying, check:

```python
print(f"Score range: {scores.min()} to {scores.max()}")
print(f"Weight contribution - Similarity: {sim_scores.max()}, Rating: {rating_scores.max()}")
```

If your "weighted average" produces values >1, something's wrong.
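The two print lines above assume scores, sim_scores, and rating_scores already exist. A slightly fuller sketch, using hypothetical inputs and a hypothetical validate_score_range helper, computes the terms separately and turns the range check into an error rather than a log line:

```python
import numpy as np


def validate_score_range(scores, low=0.0, high=1.0, tol=1e-9):
    """Print the observed range and raise if any score falls outside [low, high]."""
    scores = np.asarray(scores, dtype=float)
    lo, hi = scores.min(), scores.max()
    print(f"Score range: {lo:.3f} to {hi:.3f}")
    if lo < low - tol or hi > high + tol:
        raise ValueError(f"scores span {lo:.3f}..{hi:.3f}, expected {low}..{high}")
    return scores


# Hypothetical inputs: distances and normalized ratings, both assumed to be in [0, 1]
distances = np.array([0.05, 0.30, 0.50])
ratings = np.array([0.60, 0.70, 0.95])

sim_scores = 0.7 * (1 - distances)
rating_scores = 0.3 * ratings
print(f"Weight contribution - Similarity: {sim_scores.max():.3f}, Rating: {rating_scores.max():.3f}")
validate_score_range(sim_scores + rating_scores)  # fixed formula: stays within 0-1

buggy_scores = 0.7 * (1 - distances) + (0.3 + ratings)
try:
    validate_score_range(buggy_scores)
except ValueError as err:
    print(f"Caught: {err}")
```

Run against the buggy formula, the same check fails immediately, because those scores reach 1.6 on this toy data.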

Checklist: Scoring System Review

Before you deploy any scoring formula:

- Formula is documented as a comment with expected output range

- Weights sum to the intended total, e.g. 0.7 + 0.3 = 1.0 (check the math algebraically)

- Test with edge cases (min/max for each input)

- Verify output range with actual data (check min/max of final scores)

- Adversarial comparison (high X + low Y vs. low X + high Y)

- Code review includes someone who didn't write it (fresh eyes catch operators)

- Output is logged in production (so future bugs are catchable)

Conclusion

A single character—the difference between + and *—is all it took to silently degrade recommendation quality. The system kept running, kept returning results, and nobody noticed until we looked. This is the nature of scoring bugs: they don't crash, they corrupt. When you're building ML systems or any data ranking system, remember that mathematical correctness is not something you can debug your way out of later. You have to build it in from the start.